How to Analyze the Effectiveness of Your Content

Building a machine learning-based automated content tagging service with web frameworks

Publishers and marketers struggle to answer questions such as which digital content is responsible for acquiring, engaging, or converting users. While part of the problem is related to attribution, a bigger issue is classifying content by topic and attaching it to the right taxonomy. You cannot track what you cannot classify, and you cannot measure what you cannot track.

With large volumes of content, manual tagging by authors is inconsistent and burdensome. AArete Technology Services developed a proprietary ML-based content tagging service to automate this process. This blog post discusses the business case and our approach to this increasingly complex problem.

Background

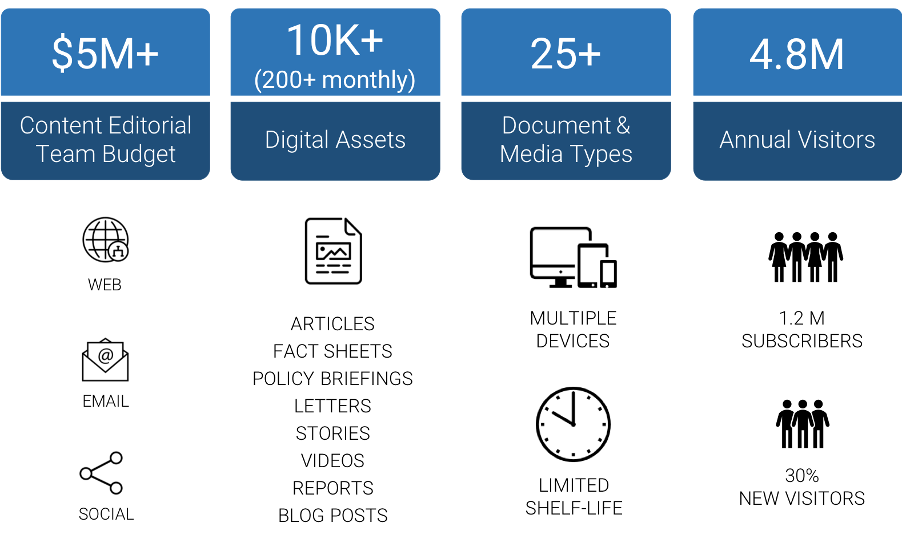

Consider a special-interest publisher with 12 publications across the country reaching more than 1.2 million readers each month. The publisher generates 200+ digital assets per month, including articles, fact sheets, policy briefings, stories, videos, reports, and blog posts. The digital content is published via a content management system and available for consumption via the publisher’s website and a native mobile app. Preview content is also pushed to social media, email, and other websites for subscriber acquisition and engagement. The content editorial team has a budget of $5M+ annually.

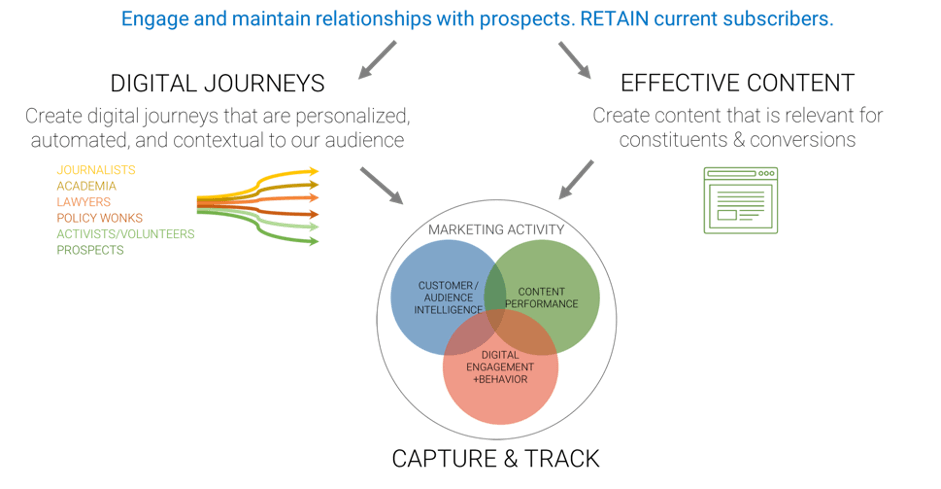

The VP of Digital Strategy was asked the following questions: What is the return on investment (ROI) on the content we are creating? Which content helps us retain current subscribers and attract and convert new ones? Corollary questions concerned customer/prospect segments, source channels, advertising effectiveness, and so on.

AArete Technology Services was brought in to help with the strategy and technology implementation needed to answer these questions.

Problem #1: Out-of-the-Box (OOTB) Google Analytics (GA)

You can’t track content consumption without a well-designed GA implementation. We’ve done quite a few data projects ingesting data from the Google Analytics 360 suite. A subsequent blog post will discuss how we implemented GA and Google Tag Manager and adjusted the attribution model to track usage.

Problem #2: Shaky Content Metadata and Taxonomy

To determine ROI, you first have to identify the content. While authors filed blogs and articles under one of the publisher’s core issues, this tagging was not standardized and in some cases was incomplete. This blog post focuses on how we tackled this problem.

The Case for Content Tagging

Most content management systems use a structured lexicon of metadata terms and keywords. With the right content tagging, a structured taxonomy, and visitor tracking (with user preference management), you can:

· Track how your audience is engaging with your content

· Design personalized customer journeys that show users the content that is of most interest to them

· Provide reliable search results

· Create content that is relevant for constituents & conversions (thus completing the virtuous cycle of create, track, improve)

If your organization has multiple digital properties, it may be important to share tags from a single taxonomy rather than have different authors and groups create their own.

The Challenges of Content Tagging

Tagging, especially in a federated content generation model (think blog writers), is subjective and prone to errors or gaps. Some organizations tackle this by creating metatags manually after the fact, but again, this is a tedious and expensive process.

Some assets might need detailed tagging while others need less. Too many tags can saturate search results, while too few might not provide the level of personalization you’re aiming for.

So what constitutes good content metadata?

· The asset must be tagged with relevant keywords

· The set of keywords must be comprehensive enough to support search

· These keywords must relate back to the taxonomy and core issues by which we will report usage/consumption

· To get maximum social media traction, it may be necessary to include “trending” keywords.
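Parts of this checklist can be automated. The sketch below is a minimal illustration, not our production code: `TAXONOMY`, `check_metadata`, and the `min_keywords`/`max_keywords` thresholds are hypothetical names and values chosen for the example. It normalizes an asset’s keywords, maps each one back to a core issue, and flags keywords that fall outside the taxonomy (which may be errors or deliberate “trending” terms). Whether a keyword is actually relevant to the content still needs a model or an editor.

```python
# Hypothetical taxonomy: each core issue maps to the keywords approved for it.
TAXONOMY = {
    "health care": {"medicaid", "medicare", "hospitals", "insurance"},
    "education": {"schools", "teachers", "student loans"},
}


def check_metadata(keywords, min_keywords=3, max_keywords=10):
    """Score an asset's keywords against the metadata checklist.

    min_keywords and max_keywords are illustrative thresholds: too few tags
    weaken search and personalization, too many saturate search results.
    """
    # Normalize case and whitespace, dropping empty entries and duplicates.
    normalized = {k.strip().lower() for k in keywords if k.strip()}
    # Core issues that at least one keyword maps back to.
    issues = {issue for issue, terms in TAXONOMY.items() if normalized & terms}
    # Keywords outside the taxonomy: errors, or deliberate "trending" terms.
    approved = set().union(*TAXONOMY.values())
    unmapped = sorted(normalized - approved)
    count_ok = min_keywords <= len(normalized) <= max_keywords
    return {
        "keyword_count": len(normalized),
        "count_ok": count_ok,
        "core_issues": sorted(issues),
        "unmapped": unmapped,
        "ok": count_ok and bool(issues),
    }


print(check_metadata(["Medicaid", "hospitals", "budget", " "]))
# → {'keyword_count': 3, 'count_ok': True, 'core_issues': ['health care'], 'unmapped': ['budget'], 'ok': True}
```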

Our Approach

From the get-go it was clear to us that we needed to automate the content tagging process, and that the use case was perfect for a machine learning-based solution.

We knew that the publisher had over 10,000 assets developed over the past few years that had been subjected to some after-the-fact tagging; the content editorial team was confident that a majority of these assets had accurate tagging (our data scientist was already starting to salivate at this training dataset).

Our approach was two-fold:

· Develop a recommendation system that prompts the author at the time of editing or publishing the content.

· Create an enrichment system that would tag content as it is ingested into our data lake. The engine would also create relationships to the taxonomy (a predetermined classification of topics and subtopics).

The idea was to build one ML-based engine that would feed both the recommendation and the enrichment systems. The former required some level of integration with the CMS, while the latter could be developed without any dependency on external systems.
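A minimal sketch of the one-engine, two-consumers design, assuming a hypothetical `predict_tags` function that returns weighted tags and a hypothetical two-level taxonomy (`SUBTOPIC_TO_TOPIC`). The stub scoring simply counts taxonomy terms so the example runs on its own; in practice this is where the trained model would be called. The recommendation path returns the top suggestions for the author, while the enrichment path attaches tags and their parent topics to a record before it lands in the data lake.

```python
from typing import Dict, List, Tuple

# Hypothetical two-level taxonomy: subtopic tag -> parent topic.
SUBTOPIC_TO_TOPIC = {
    "medicaid": "health care",
    "hospitals": "health care",
    "student loans": "education",
}


def predict_tags(text: str) -> List[Tuple[str, float]]:
    """Stand-in for the shared ML engine: (tag, weight) pairs, highest weight first.

    This stub weights each tag by how often it appears in the text; a real
    engine would call the trained topic model instead.
    """
    lowered = text.lower()
    counts = {tag: lowered.count(tag) for tag in SUBTOPIC_TO_TOPIC}
    total = sum(counts.values())
    if total == 0:
        return []
    scored = [(tag, n / total) for tag, n in counts.items() if n > 0]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


def recommend_for_author(text: str, k: int = 3) -> List[str]:
    """Recommendation path: the top-k tags to suggest in the CMS editor."""
    return [tag for tag, _ in predict_tags(text)[:k]]


def enrich_for_lake(record: Dict) -> Dict:
    """Enrichment path: attach weighted tags and their parent topics at ingest time."""
    tags = predict_tags(record["body"])
    return {
        **record,
        "tags": [
            {"tag": tag, "weight": round(w, 3), "topic": SUBTOPIC_TO_TOPIC[tag]}
            for tag, w in tags
        ],
    }


print(recommend_for_author("Medicaid expansion and hospitals: how Medicaid funding flows"))
# → ['medicaid', 'hospitals']
```

Keeping both consumers behind the same `predict_tags` call means a retrained model improves the author suggestions and the data-lake enrichment at the same time.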

The Technology Deep-dive

To get around these hurdles, we decided to automate topic attribution for new and other untagged content by using previously tagged historical data. We therefore built two models based on Latent Dirichlet Allocation (LDA), a popular topic-modeling algorithm: a semi-supervised model using Guided LDA and an unsupervised model using standard LDA. Our corpus contained previously tagged articles that used a predetermined set of tags; those tagged articles would serve as training data for our exercise.
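For readers unfamiliar with LDA, the following is a compact, pure-Python collapsed Gibbs sampler, written for illustration rather than taken from our implementation (production work would use an established library). Passing `seed_topics` (a hypothetical mapping from seed word to topic index) together with `seed_confidence` biases the initial topic assignment of those words, which is roughly the seeding mechanism of the open-source GuidedLDA package; with no seeds it is plain, unsupervised LDA. `alpha` and `beta` are the usual Dirichlet priors, and the defaults here are arbitrary.

```python
import random


def lda_gibbs(docs, n_topics, n_iter=200, alpha=0.1, beta=0.01,
              seed_topics=None, seed_confidence=0.0, rng_seed=0):
    """Fit LDA with collapsed Gibbs sampling.

    docs: list of token lists.
    seed_topics: optional {word: topic index}. With probability
        seed_confidence, a seed word's initial topic is set to its seed topic
        (a GuidedLDA-style nudge); otherwise topics start at random.
    Returns (doc_topic, topic_word): per-document topic proportions and
    per-topic word probabilities.
    """
    rng = random.Random(rng_seed)
    seed_topics = seed_topics or {}
    vocab = sorted({w for doc in docs for w in doc})
    w_id = {w: i for i, w in enumerate(vocab)}
    V, K = len(vocab), n_topics

    ndk = [[0] * K for _ in docs]      # tokens per (document, topic)
    nkw = [[0] * V for _ in range(K)]  # tokens per (topic, word)
    nk = [0] * K                       # tokens per topic
    z = []                             # current topic of every token

    def update(d, k, v, delta):
        ndk[d][k] += delta
        nkw[k][v] += delta
        nk[k] += delta

    # Initialization: seeded words lean toward their seed topic.
    for d, doc in enumerate(docs):
        z_doc = []
        for w in doc:
            if w in seed_topics and rng.random() < seed_confidence:
                k = seed_topics[w]
            else:
                k = rng.randrange(K)
            z_doc.append(k)
            update(d, k, w_id[w], +1)
        z.append(z_doc)

    for _ in range(n_iter):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                v = w_id[w]
                update(d, z[d][i], v, -1)  # take the token out of the counts
                # Full conditional p(z = t | all other assignments), unnormalized.
                weights = [(ndk[d][t] + alpha) * (nkw[t][v] + beta) / (nk[t] + V * beta)
                           for t in range(K)]
                k = rng.choices(range(K), weights=weights)[0]
                z[d][i] = k
                update(d, k, v, +1)

    doc_topic = [[(ndk[d][t] + alpha) / (len(doc) + K * alpha) for t in range(K)]
                 for d, doc in enumerate(docs)]
    topic_word = [{w: (nkw[t][w_id[w]] + beta) / (nk[t] + V * beta) for w in vocab}
                  for t in range(K)]
    return doc_topic, topic_word


docs = [
    "medicaid hospitals insurance medicaid".split(),
    "schools teachers students schools".split(),
    "insurance medicaid hospitals".split(),
    "teachers schools funding".split(),
]
doc_topic, topic_word = lda_gibbs(docs, n_topics=2, n_iter=100,
                                  seed_topics={"medicaid": 0, "schools": 1},
                                  seed_confidence=0.9)
for t, dist in enumerate(topic_word):
    print(t, sorted(dist, key=dist.get, reverse=True)[:3])
```

In a semi-supervised setup like ours, the seed words would naturally come from the predetermined tags, so each learned topic starts out anchored to a known taxonomy term.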

We ran the model against our data to test it and gain insight into the most relevant topics. The image below shows an example of us analyzing some of the clusters to determine the relevant terms by comparing each term’s overall frequency (across the whole corpus) with its frequency within the selected topic.
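The comparison described above resembles the term-relevance score popularized by the LDAvis/pyLDAvis visualization, which blends a term’s probability within a topic with its lift (within-topic probability divided by corpus-wide probability). We don’t claim this is the exact metric behind our analysis. The function below is a sketch that takes raw term counts, with `lam` (λ) as the tuning knob: λ = 1 ranks purely by within-topic frequency, λ = 0 purely by lift. The counts in the usage lines are toy numbers.

```python
import math


def term_relevance(topic_counts, corpus_counts, lam=0.6, top_n=5):
    """Rank a topic's terms by LDAvis-style relevance.

    relevance(w) = lam * log p(w | topic) + (1 - lam) * log(p(w | topic) / p(w))

    topic_counts: term -> count within the topic (or cluster).
    corpus_counts: term -> count across the whole corpus; must cover every topic term.
    """
    topic_total = sum(topic_counts.values())
    corpus_total = sum(corpus_counts.values())
    scores = {}
    for term, n in topic_counts.items():
        if n == 0:
            continue  # log(0) is undefined; an absent term is never relevant
        p_topic = n / topic_total
        p_corpus = corpus_counts[term] / corpus_total
        lift = p_topic / p_corpus  # how over-represented the term is in this topic
        scores[term] = lam * math.log(p_topic) + (1 - lam) * math.log(lift)
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


topic = {"policy": 20, "medicaid": 8, "report": 2}
corpus = {"policy": 200, "medicaid": 10, "report": 90}
print(term_relevance(topic, corpus, lam=1.0))  # → ['policy', 'medicaid', 'report']
print(term_relevance(topic, corpus, lam=0.6))  # → ['medicaid', 'policy', 'report']
```

Lowering λ pushes generic high-frequency terms (here, “policy”) down and surfaces the terms that distinguish the topic.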

With some enhancements, the same model can also be used as a recommender system that suggests articles to readers, or as a trend detector that uncovers themes and trends across articles. Our next task was to package this functionality as a service that third-party applications could consume.
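To illustrate the recommender and trend-detector ideas, here is a sketch that works directly on per-article topic distributions, such as those an LDA model produces. The choice of Hellinger distance and the helper names `recommend` and `topic_trend` are assumptions for this example; cosine similarity or other measures would also work. `doc_topics` maps a hypothetical article ID to its topic distribution.

```python
import math
from collections import defaultdict


def hellinger(p, q):
    """Hellinger distance between two topic distributions: 0 = identical, 1 = no overlap."""
    bc = sum(math.sqrt(a * b) for a, b in zip(p, q))  # Bhattacharyya coefficient
    return math.sqrt(max(0.0, 1.0 - bc))  # clamp tiny negative rounding errors


def recommend(article_id, doc_topics, k=3):
    """Return the k articles whose topic mix is closest to article_id's."""
    target = doc_topics[article_id]
    distances = [(other, hellinger(target, dist))
                 for other, dist in doc_topics.items() if other != article_id]
    distances.sort(key=lambda pair: pair[1])
    return [other for other, _ in distances[:k]]


def topic_trend(articles, topic):
    """Average share of one topic per period (e.g. per month), to spot rising themes.

    articles: iterable of (period, topic_distribution) pairs.
    """
    totals, counts = defaultdict(float), defaultdict(int)
    for period, dist in articles:
        totals[period] += dist[topic]
        counts[period] += 1
    return {period: totals[period] / counts[period] for period in sorted(totals)}


doc_topics = {"a": [0.9, 0.1], "b": [0.8, 0.2], "c": [0.1, 0.9], "d": [0.5, 0.5]}
print(recommend("a", doc_topics, k=2))  # → ['b', 'd']
```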

REST APIs and Web Frameworks

To package the solution, we decided to build a set of REST APIs that expose this functionality to third-party systems. We considered Django and Flask as potential options. Django is the older of the two: a full-stack Python web framework that comes with an admin panel, an ORM (object-relational mapper), and database interfaces straight out of the box.

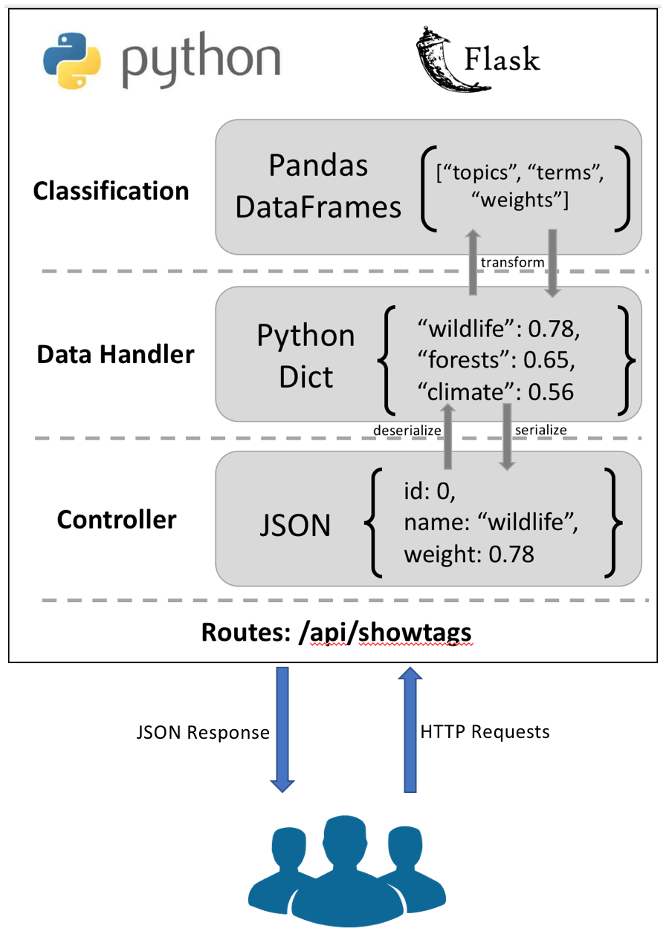

Our focus was on creating simple REST APIs that would package our code as a service, and for this purpose the Flask microframework was much more appealing because of its lighter footprint. The framework’s simplicity also leaves ample room for customization and makes it possible to add new services and modules quickly. Flask let us create an API that acts as an access point for the model, so its predictive capabilities can be used through HTTP requests.

The initial set of services we planned to expose suggested tags for any content on the site. The service would also assign weights to the tags to help us understand which tags are most relevant to the content and which are secondary. We designed a URL scheme that assigns endpoints to specific tasks. For articles, we would pass a unique content ID so the service could fetch the article and generate suggestions. A data handler would organize the tags as a list of dictionaries, and a classifier would use Pandas DataFrames to perform the underlying calculations. The result would then be serialized to JSON, including the weight assigned to each tag, so the service response could easily be consumed by any application.
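A minimal Flask sketch of such an endpoint follows. The URL scheme (`/api/v1/articles/<content_id>/tags`), the in-memory `ARTICLES` store, the `TAG_TERMS` vocabulary, and `suggest_tags` are all hypothetical stand-ins: the real service fetched content from the CMS and scored it with the trained model, and it used Pandas DataFrames for the calculations, which this sketch replaces with plain Python to stay short. The data handler returns a list of dictionaries with a weight per tag, and `jsonify` serializes the response to JSON.

```python
from flask import Flask, abort, jsonify

app = Flask(__name__)

# Hypothetical in-memory store; the real service fetched content by ID from the CMS.
ARTICLES = {
    "1001": "Medicaid expansion and hospital funding: how Medicaid dollars reach rural clinics.",
}

# Hypothetical tag -> indicator terms, standing in for the trained topic model.
TAG_TERMS = {
    "medicaid": ["medicaid"],
    "hospitals": ["hospital"],
    "education": ["school", "teacher"],
}


def suggest_tags(text):
    """Data handler: return a list of {"tag", "weight"} dicts, highest weight first.

    Weights are normalized term counts here, a placeholder for model scores.
    """
    lowered = text.lower()
    raw = {tag: sum(lowered.count(term) for term in terms)
           for tag, terms in TAG_TERMS.items()}
    total = sum(raw.values())
    if total == 0:
        return []
    tags = [{"tag": tag, "weight": round(n / total, 3)}
            for tag, n in raw.items() if n > 0]
    return sorted(tags, key=lambda t: t["weight"], reverse=True)


@app.route("/api/v1/articles/<content_id>/tags", methods=["GET"])
def article_tags(content_id):
    """GET endpoint: look up the content by ID and return weighted tag suggestions."""
    text = ARTICLES.get(content_id)
    if text is None:
        abort(404)  # unknown content ID
    return jsonify({"content_id": content_id, "tags": suggest_tags(text)})
```

Flask’s built-in test client (`app.test_client().get("/api/v1/articles/1001/tags")`) exercises the endpoint without starting a server. Saved as `tag_service.py`, the app can be served locally with `flask --app tag_service run` (Flask 2.2 or later).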

These services can be consumed by any client via simple HTTP requests. They could even be integrated into a content management system to suggest tags as soon as an article is published.
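On the client side, the Python standard library is enough. In this sketch, `tags_url`, `fetch_tag_suggestions`, `primary_tags`, the endpoint path, and the 0.3 weight threshold are hypothetical, and the code assumes the service returns a JSON object with a `tags` list of `{"tag", "weight"}` entries. A CMS plugin could call something like this on publish and pre-select the primary tags for the author to confirm.

```python
import json
from urllib.parse import quote
from urllib.request import urlopen


def tags_url(base_url, content_id):
    """Build the (hypothetical) endpoint URL for one piece of content."""
    return f"{base_url.rstrip('/')}/api/v1/articles/{quote(content_id, safe='')}/tags"


def fetch_tag_suggestions(base_url, content_id, timeout=5.0):
    """GET the suggestions and return the service's list of {"tag", "weight"} dicts."""
    with urlopen(tags_url(base_url, content_id), timeout=timeout) as resp:
        return json.load(resp)["tags"]


def primary_tags(tags, min_weight=0.3):
    """Keep only tags whose weight clears a (hypothetical) threshold."""
    return [t["tag"] for t in tags if t["weight"] >= min_weight]


# Example (requires a running service):
# primary_tags(fetch_tag_suggestions("http://localhost:5000", "1001"))
```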