What is Azure Synapse Analytics, and What is it Used For?

This is an AArete Digital & Technology solution

6 Best Practices for Maximizing Your Data Insights with Azure Synapse Analytics

Over the last few years, data warehouse architecture has seen a huge shift towards cloud-based data warehouses and away from traditional on-site warehouses when it comes to helping users analyze data. Earlier, huge investments in IT resources were required to set up a data warehouse to build and manage a designed on-premise data center. Today, data warehousing functions are offered as a service (DWaaS) that is accessible via an internet connection. This has negated the costly capital expenditure and management that was earlier required for an on-premise data warehouse.

In this blog post, we will talk about Microsoft Azure Synapse Analytics, which is one of the modern data analytics platforms that is grabbing the attention of companies worldwide.

What is Azure Synapse Analytics?

Microsoft Azure Synapse Analytics is a Platform as a Service (PaaS) offering that helps users create an Enterprise Data Warehouse (EDW) by leveraging powerful Massively Parallel Processing (MPP) architecture.

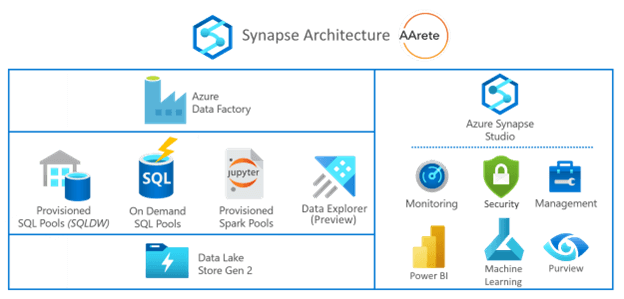

Azure Synapse Analytics can quickly run complex queries across petabytes of data using a flexible architecture that includes on-demand SQL clusters (pools), reserved SQL clusters (provisioned), or Spark pools for a variety of analytical workloads. Along with its capability of processing massive volumes of relational and non-relational data, its compute resources are massively scalable and can be paused in seconds. Microsoft provides Azure Data Factory integration with Synapse to streamline the integration of data processing.

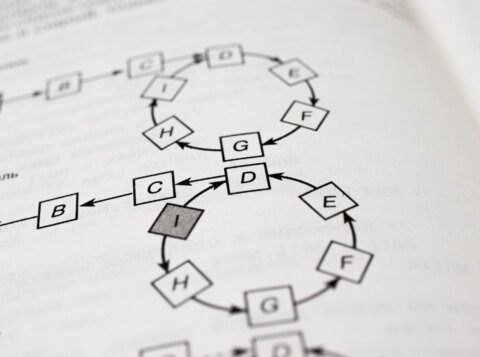

Limitless Data Processing with Synapse Analytics Architecture & Components

Components of the Architecture

Another interesting thing about Azure Synapse Analytics is that you can connect data sources to Azure Synapse Analytics in 3 simple steps executed in preparation, metadata migration, and data migration stages.

Benefits of Migrating to Azure Synapse

One of the key features of Azure Synapse Analytics is that it enables you to use your traditional business intelligence skills to build a data warehouse in Azure. This comes with unmatched computing power in the cloud. Some of the other features built into Azure that can be leveraged by creating an Azure Synapse Analytics warehouse include:

- Smoothly migrate SQL server code with native support for T-SQL in Synapse Analytics.

- Guarantee of 99.9% availability with fully managed analytics service, so you don’t need your DBAs to take care of any of this.

- Built-in advanced security, like connection security, authentication, authorization, and an option to add double encryption of data at rest using customer-managed keys.

- Built-in intelligent caching that helps you accelerate access to your data and query performance.

- Synapse SQL offers the ability to switch between dedicated serverless SQL pools for predictable performance and serverless SQL endpoints for unplanned or bursty workloads.

- Support for a wide range of programming languages, including .Net, Python, Scala, and others.

- Integrated data science, ETL, and data visualization in addition to data warehousing, with an option for no code ETL.

- Seamlessly switch between Apache Spark and SQL to explore and analyze files stored in the data lake.

- Azure Synapse Pipelines is a cloud-supported service for ETL (Extract, Transform, Load) and data integration. It enables the development of data-oriented workflows for coordinating large-scale data transfer and transformation.

The availability of cloud data warehouses makes data warehousing much simpler, convenient, and accessible to a broader range of businesses. However, before you rush into the setup of your cloud data warehouse, you should understand that managing a data warehouse in the cloud comes with a whole new set of challenges, regardless of whether you have managed an on-premise setup before.

This is where our experienced Azure data engineers help our clients who want to switch from on-prem to a cloud-based data warehouse. On-premise data warehouses are increasingly costly. Our team helps them migrate to Azure Synapse Analytics and mitigate challenges during and post-migration.

In certain scenarios where high throughput was required to pull petabytes of data from our client’s database for reporting. We used the architecture in Azure Synapse Analytics to enable extremely high-performance query execution for reporting. We also help clients achieve faster insights because the distributed architecture of Azure Synapse provides performance unmatched by any legacy database architecture.

Azure Synapse Best Practices

To enable you to better prepare for managing a data warehouse in the cloud, below are some best practices for using Azure Data Warehouse to consider.

1. Performance with Proper Distribution

The distribution of tables is one of the powerful features that contribute to the performance of SQL queries on the compute nodes. There are three types of distribution in Azure SQL Data Warehouse:

- Hash-distribution tables – A hash-distributed table can deliver the highest query performance for joins and aggregations on large table columns. The hash function uses the values in the distribution column to assign each row to a distribution.

- For example, a unique column is always preferred for hash distribution, resulting in optimum performance. However, for large tables, performing a hash distribution is recommended. Also, when you join two tables, the distribution of both tables should be in the same column for the best performance.

- Round-robin tables – A round-robin table distributes data evenly across the table but without any further optimization. A round-robin table stores data fast, but query performance can often be improved with hash-distributed tables. Joins on round-robin tables require reshuffling data, which can degrade the performance of the query. In scenarios when you need to store data in tables but none of the columns are qualified for the distribution, the round-robin tables method can be used.

- Replicated tables – It is a table that is replicated and caches a full copy of the table on each compute node. However, this reduces the DMS operation. Replicated tables are best utilized and only recommended for small tables. For example, a lookup table can be replicated for faster availability in joins and aggregations.

2. Selection of Data Warehouse Unit (DWU)

Azure SQL Data Warehouse CPU, memory, and IO are bundled into units of compute scale called Data Warehouse Units (DWUs). A change to your service level alters the number of DWUs that are available to the system, which in turn adjusts the performance and cost of your system.

SQL Data Warehouse has two generations – Gen1 and Gen2. We recommend Gen2 to leverage the best performance from SQL Data Warehouse as Gen2 provides 2.5x more memory per query than Gen1, enabling you to scale up to 30,000 DWUs and have unlimited columnar storage.

Both generations measure DWUs as below:

- Gen1 data warehouses are measured in DWUs.

- Gen2 data warehouses are measured in computing DWUs (cDWUs).

Parameters that need to be checked before selecting a DWU are below:

- Number of compute nodes required for processing

- Number of distributions per compute node

- Memory required per data warehouse

- Maximum concurrent queries to be executed; consider execution sharing among resource classes like small, medium, large, and small resource class

3. Fast Data Uploading Strategy

Here are the 4 ways to upload data faster:

- Push prepared data in Azure Blob Storage

- Pull data into the external table (polybase)

- Move data by some transformation into staging tables

- Merge the data of staging with the production tables

Clustered Columnstore indexes are now offered by default to enable compression with fast query execution.

4. Reduce Cost by Writing an Optimized SQL Query

Write an optimized query to pull data. Also, the query should be memory efficient with minimum execution time. A wrong query method can spike the billing of the data warehouse. And you must select only the required columns to save memory. For example, if two tables need to be joined, they must be joined on the same distribution key with proper filters; otherwise, the query execution may go under suspended/running status for a long time, which will lead to additional costs.

5. Use Smaller Resource Classes to Increase Concurrency, and Larger for Query Performance

- More parallelism can be achieved by using a smaller resource class

- Certain queries like large joins, indexing, heavy loads on clustered column store tables, and others will benefit from larger memory allocations, which are available with the large resource group. A large resource group user should not be used in every scenario as it would lead to a queue for other queries that will be blocked until the large resource group user query gets executed.

6. Use Create Table as Select (CTAS) to Enhance Query Performance and Joins

CTAS is one of the most important T-SQL features available in Azure SQL Data Warehouse. CTAS is a parallel operation that creates a new table based on the output of a SELECT statement. It is the simplest and fastest way to create and insert data into a table with a single command. For example, to join multiple tables that have separate distributions, CTAS can be used to create an intermediate table with the distribution of the third table, and then this intermediate table can be joined with the third table to optimize performance.

Learn more about AArete’s Data & Analytics solutions