A Look at AWS Glue – Simplify Your ETL & Data Transfers on the Cloud

Learn how AWS Glue can help you automate time-consuming data preparation processes and run your ETL jobs on a fully managed scalable Apache Spark environment.

As organizations move to the cloud, so does their transactional data. Firms with a hybrid of on-prem and cloud based databases, lakes, and warehouses have had to get creative in extracting, transforming, and loading (ETL) this transactional data: pulling the data with on-prem ETL tools and then pushing back into the cloud. Emerging cloud-based ETL tools are trying to address this awkward arrangement. And for companies moving to AWS, Glue is a compelling choice. Here’s why.

What is AWS Glue?

When performing ETL processes, developers only sparingly use ETL tools because they involve error-prone manual coding. Teams are responsible to discover the data, convert it to the desired format, map it on the cluster, schedule jobs and then test them. AWS Glue can solve these challenges.

AWS Glue is a managed extract, transform, load (ETL) service that moves data among various data stores. The service generates ETL jobs on data and handles potential errors, creating Python code to move data from source to destination using Apache Spark. AWS Glue removes potential issues with hand-coding ETL tasks, as subsequent changes to data format, volume and target schemas require frequent manual revisions to code. The AWS Glue service has three components:

- Data Catalog: Data catalog is a managed service that lets you store, annotate, and share metadata in the AWS Cloud in the same way you would in an Apache Hive metastore. Each AWS account has one AWS Glue Data Catalog. It provides a uniform repository where disparate systems can store and find metadata to keep track of data in data silos, and use that metadata to query and transform the data.

- Job Authoring: This enables AWS Glue to generate Python/Scala code to move data from its source to its destination using the underlying Spark implementation. With the latest updates, Glue now supports running Scala Spark code.

- Job Execution: Serverless job executions. Developers don’t need to deploy, configure or provision servers for AWS Glue. Jobs automatically run in a Spark environment.

The ETL scripts from Glue can handle both semi-structured and structured data. If AWS Glue encounters bad data, it places error rows in separate Simple Storage Service (S3) buckets instead of allowing the job to crash. If a crash occurs, the job continues from the point where it stopped; therefore, no data duplicates, unlike other ETL tools that have this problem.

With Glue, you can construct the ETL in just four steps:

- Crawl and catalog your data

- Specify mappings to generate scripts

- Explore your data interactively

- Schedule jobs to be run

How Data Catalog Works in AWS Glue

A data catalog is a concept in the Big Data space. The different types of data catalog users fall into three buckets — the data consumers (data and business analysts), data creators (data architects and database engineers), and data curators (data stewards and data governors).

A data catalog’s purpose is multifold. At its core a data catalog centralizes metadata. To be considered effective, a data catalog must –

- Centralize all information on the data in one location — meaning the structure, quality, definitions, and usage of the data should be easily accessible from one centralized location.

- Allow for self-service for end users — meaning context of the data is already provided via conversations and articles. On the off chance the user still does not understand the dataset, the expert behind the data should be listed.

- Auto-populate itself to ensure consistency and accuracy.

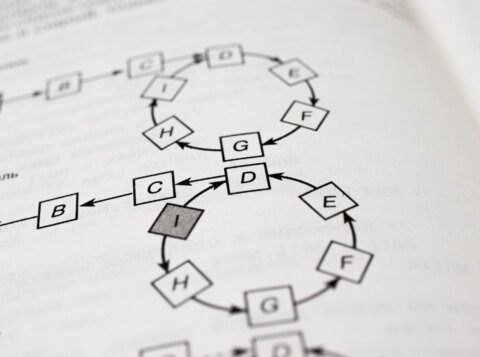

Data Cataloging Process Flow

(Numbers below correspond to diagram above)

Item 1

AWS Glue crawlers help discover and register the schema for datasets in the AWS Glue Data Catalog. The crawlers go through your data, and inspect portions of it to determine the schema. In addition, the crawler can detect and register partitions. As a first step, crawlers run any custom classifiers that you choose to infer the schema of your data. You can provide the code for custom classifiers, and they can run in the order that you specify.

Item 2

If no custom classifier matches your data’s schema, built-in classifiers try to recognize your data’s schema. AWS Glue provides built-in classifiers for various formats, including JSON, CSV, web logs, and many database systems.

For example, if you run a crawler on CSV files stored in S3, the built-in CSV classifier parses CSV file contents to determine the schema for an AWS Glue table. This classifier checks for the following delimiters:

- Comma (,)

- Pipe (|)

- Tab (\t)

- Semicolon (;)

- Ctrl-A (\u0001)

- Ctrl-A is the Unicode control character for Start Of Heading.

Item 3

The crawler connects to the data store. Some data stores require connection properties for crawler access.

Item 4

The inferred schema is created for your data.

Item 5

The crawler writes metadata to the Data Catalog. A table definition contains metadata of your data in your data store. The table is written to a database, which is a container of tables in the Data Catalog. Attributes of a table include classification, which is a label created by the classifier that inferred the table schema.

AWS Glue Use Case – Run queries on S3 using Athena

Query your S3 files using Athena and Glue

When to use AWS Glue

- To build a data warehouse to organize, cleanse, validate, and format data

- To run serverless queries against your Amazon S3 data lake

- To create ETL-driven pipelines using AWS Glue

- To understand your data assets

The Benefits of AWS Glue

AWS Glue can provide organizations with multiple benefits, including no server maintenance, cost savings by avoiding over-provisioning or under-provisioning resources, support for data sources including easy integration with Oracle and MSSQL data sources, and AWS Lambda integration.

AWS Glue natively supports data stored in Amazon Aurora, Amazon RDS for MySQL, Amazon RDS for Oracle, Amazon RDS for PostgreSQL, Amazon RDS for SQL Server, Amazon Redshift, and Amazon S3, as well as MySQL, Oracle, Microsoft SQL Server, and PostgreSQL databases in your Virtual Private Cloud (Amazon VPC) running on Amazon EC2. The metadata stored in the AWS Glue Data Catalog can be readily accessed from Amazon Athena, Amazon EMR, and Amazon Redshift Spectrum.